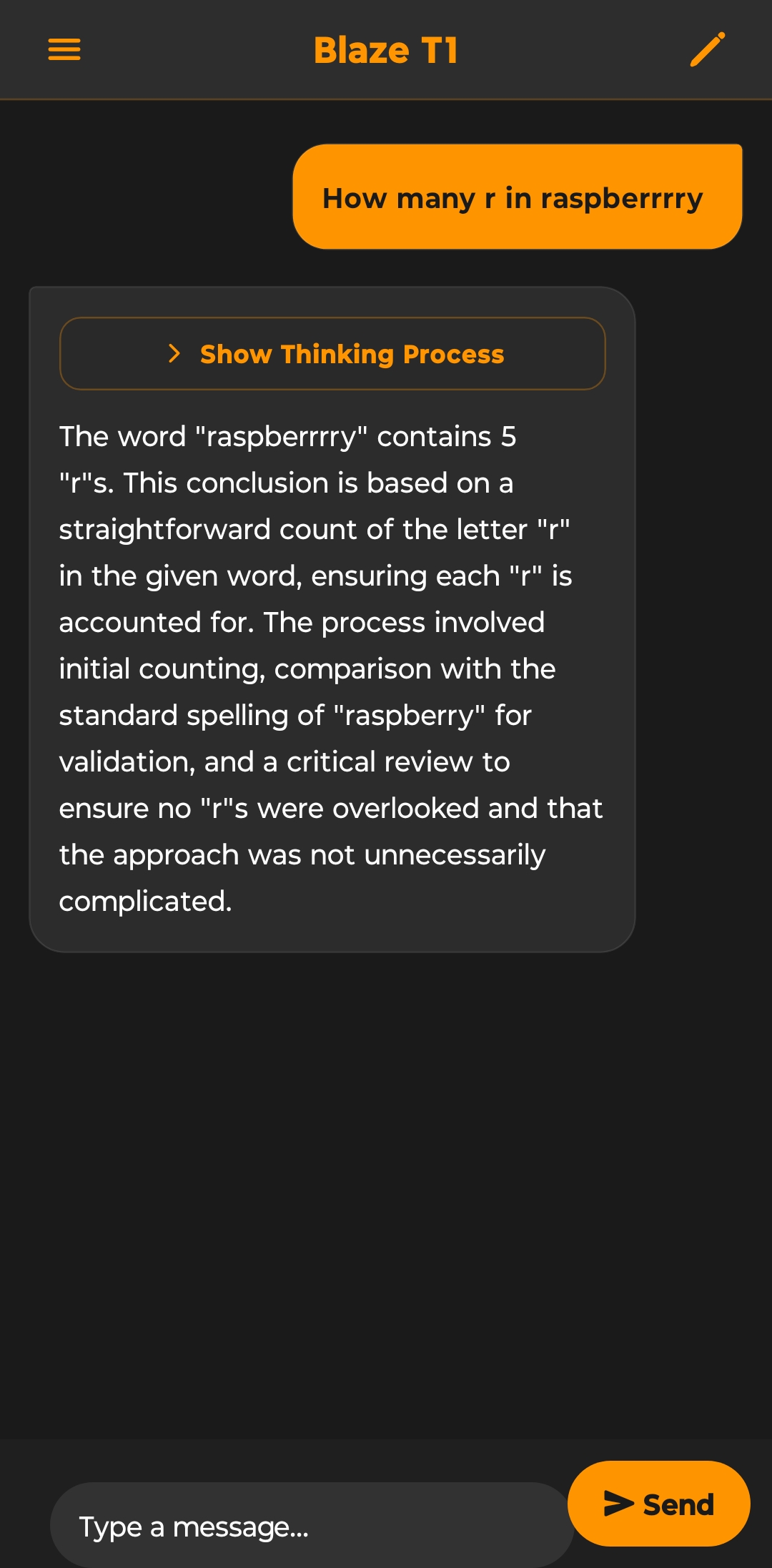

Refrain from casual conversation. This model does pure reasoning with no extra tools. It is a 7B model focused fully on inference time, so it will struggle with basic calculations, but it knows how many r's are in "raspberrrry" and can handle any combination of words or tasks that require reasoning.

Nova

Make a chat using Llama on Cerebras AI. Extract all needed info from here: Here are all the details you need to create a chat with the AI using the Cerebras API: ### API Configuration ```javascript co...

Comments (2)

Refrain from casual conversation. This model does pure reasoning with no extra tools. It is a 7B model focused fully on inference time, so it will struggle with basic calculations, but it knows how many r's are in "raspberrrry" and can handle any combination of words or tasks that require reasoning.

This one is a bit dumber than the last one because I had to inject it with extra stuff so it wouldn't think for 8000+ tokens straight. It works as a double-layered system: one model purely thinks, and the other purely uses that reasoning to answer questions, hence the name 2 layer GPT. It's a really, really long chain structure I made because I was bored and wanted to see how close I could get to reasoning-model-level intelligence without reasoning-model-level costs.
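
For anyone curious what a two-layer setup like this could look like, here is a minimal sketch in Python. It is not the actual implementation behind this bot. The function name `two_layer_answer`, the prompt wording, and the word-based cap on the reasoning are all assumptions made for illustration, and the two "models" are plain callables so the example runs without any API key.

```python
from typing import Callable

# Hypothetical type: any function that maps a prompt string to a completion.
# In a real setup each would wrap a call to a hosted model.
Model = Callable[[str], str]


def two_layer_answer(
    question: str,
    thinker: Model,
    answerer: Model,
    max_reasoning_words: int = 2000,
) -> str:
    """Layer 1 only reasons; layer 2 only answers from that reasoning."""
    # Layer 1: ask for reasoning and explicitly no final answer.
    reasoning = thinker(
        "Think step by step about the question. Do not answer yet.\n\n"
        f"Question: {question}"
    )

    # Crude budget so the thinking stage cannot run on forever.
    # Whitespace-separated words stand in for tokens here (an assumption).
    words = reasoning.split()
    if len(words) > max_reasoning_words:
        reasoning = " ".join(words[:max_reasoning_words])

    # Layer 2: answer using only the reasoning produced above.
    return answerer(
        f"Question: {question}\n\n"
        f"Reasoning:\n{reasoning}\n\n"
        "Using only the reasoning above, give the final answer."
    )


if __name__ == "__main__":
    # Stub models for a dry run; swap in real model calls as needed.
    def stub_thinker(prompt: str) -> str:
        word = "raspberrrry"
        return f"Spell it out: {' '.join(word)}. Count each 'r' one by one."

    def stub_answerer(prompt: str) -> str:
        return "Final answer based on: " + prompt.split("Reasoning:\n")[1].split("\n\n")[0]

    print(two_layer_answer("How many r in raspberrrry?", stub_thinker, stub_answerer))
```

Keeping the two stages as separate calls means the thinking budget can be tuned on its own, which is one plausible way to trade answer quality against cost.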